We have now launched a beta version of TombReader! This version is only available to a select group at the Allard Pierson museum. We will do extensive beta testing and gather feedback to improve TombReader before releasing it on Google Play.

Almost there

TombReader design

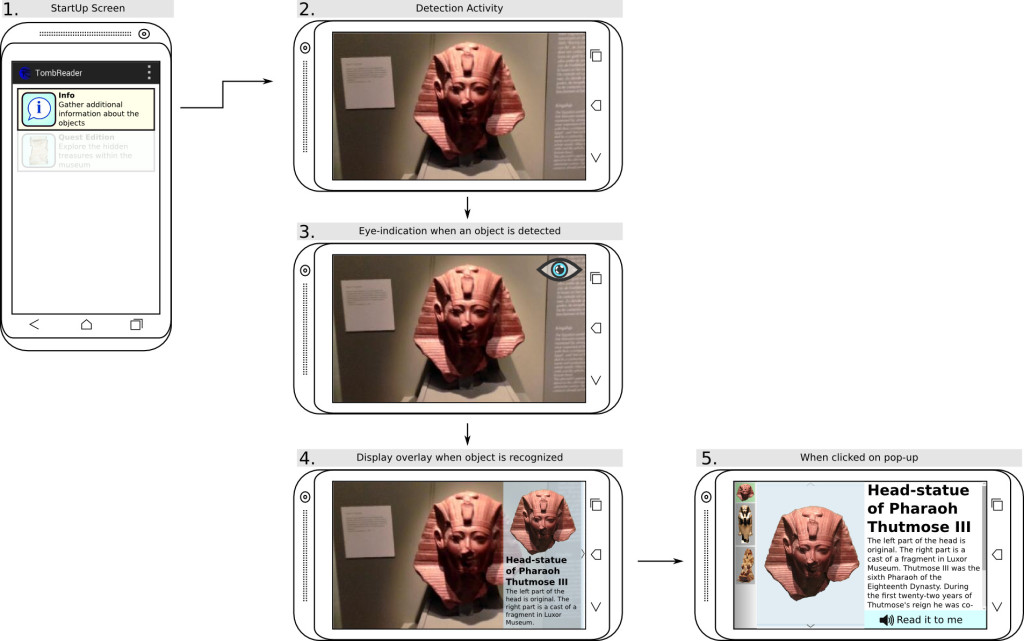

Since the last post we have created an alpha version of TombReader, which we tested on several Android devices. This month's progress has mainly been on optimising the speed of TombReader so that it runs smoothly while still recognising objects in real time. The layout of TombReader can be seen in the image, which shows the following screens:

- Startup screen with the option to gather additional information about the objects, or to start the Quest edition (not yet implemented).

- Simply point the camera at an object to start the recognition.

- When TombReader sees an object, but is not yet sure what kind of object it is, it will display an eye-indication. Hold the camera still to complete the recognition.

- When TombReader is confident about the object in front of the camera, it will display an overlay with some of the additional information.

- When you tap the overlay, all of the available information is shown, including related photos.
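The step from "sees an object" to "is confident" can be illustrated with a small state tracker. This is only a sketch of one plausible approach, not the logic TombReader actually uses: the class name `RecognitionTracker`, the window of recent frames, and both thresholds are assumptions made for illustration. It assumes the recogniser returns a label and a confidence between 0 and 1 for every camera frame.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class RecognitionTracker:
    """Turns noisy per-frame guesses into one of three screen states.

    All parameter values are hypothetical; the post does not state them.
    """

    def __init__(self, window: int = 5, see: float = 0.3, sure: float = 0.8):
        self.window = window  # frames that must agree before the overlay appears
        self.see = see        # minimum confidence to show the eye indication
        self.sure = sure      # mean confidence needed to show the overlay
        self.recent: Deque[Tuple[str, float]] = deque(maxlen=window)

    def update(self, label: str, confidence: float) -> Tuple[str, Optional[str]]:
        # A different label means the camera moved on, so start counting again.
        if self.recent and self.recent[-1][0] != label:
            self.recent.clear()
        self.recent.append((label, confidence))

        mean = sum(c for _, c in self.recent) / len(self.recent)
        if len(self.recent) == self.window and mean >= self.sure:
            return ("overlay", label)   # confident: show the information overlay
        if confidence >= self.see:
            return ("eye", None)        # something is there: ask the user to hold still
        return ("searching", None)      # nothing recognisable in view
```

Requiring several consecutive frames to agree is what makes holding the camera still useful in this sketch: a single lucky frame never triggers the overlay on its own.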

For the coming months I’ve been given the opportunity to develop another App in collaboration with the HvA. Taking this job means that I will be working on that App for the next two months and won’t be able to develop TombReader as actively as I used to. But don’t worry, TombReader will be released before Christmas!

Milestone Reached

This month one of the major milestones has been reached: being able to recognise objects with a smartphone. While it was previously only possible to recognise the objects on my own laptop, I have now successfully ported all of the detection algorithms to an Android App, which can distinguish the 29 objects from the Allard Pierson museum. A simple demo has been created which indicates what type of object is in front of the camera.

Towards building the App

With this milestone reached, most of the research is finished, meaning that I can now fully focus on actually building the App!

First month

It’s been one month already, and during this time I’ve collected a dataset of the objects found in the Allard Pierson museum. This dataset consists of pictures of the objects taken from all angles, and is used to train the computer so that it will be able to recognise these objects when you take a picture of them.

Based on the objects from the Allard Pierson museum, we can conclude that it will be much more beneficial to move away from recognising individual hieroglyphs and to focus on detecting the objects as a whole. This brings a few advantages, such as a higher classification rate and real-time classification, which means that the App will recognise the object as soon as it appears in front of the camera.

Currently, one of the most promising methods for this task is based on an Artificial Neural Network, which tries to mimic the behaviour of real brain cells. Much research has been conducted in this particular field of study, as a biological brain is still the most advanced phenomenon when it comes to recognising visual input.
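To give an idea of what such a network computes, here is a toy sketch in plain Python. It is not the network used in TombReader: the layer sizes, the random (untrained) weights, and the eight made-up input features are all assumptions for illustration. Only the number of output classes, 29, comes from the museum objects mentioned in these posts.

```python
import math
import random

NUM_CLASSES = 29  # number of museum objects mentioned in the posts


def dense(inputs, weights, biases):
    """One layer of artificial neurons: a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """A neuron only 'fires' when its weighted input is positive."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_out, n_in, rng):
    """Untrained placeholder weights; a real network learns these from the dataset."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def classify(features, hidden, output):
    """Forward pass: features -> hidden layer -> one probability per object."""
    activations = relu(dense(features, *hidden))
    probs = softmax(dense(activations, *output))
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


rng = random.Random(0)
hidden = random_layer(16, 8, rng)            # 8 hypothetical image features in
output = random_layer(NUM_CLASSES, 16, rng)  # 29 object scores out
features = [rng.random() for _ in range(8)]
best, probs = classify(features, hidden, output)
```

Training would adjust the weights until the highest probability lands on the correct object for the pictures in the dataset; with random weights, as here, the prediction is meaningless.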

First week

As of this week, I have officially started working full time to make TombReader a reality!

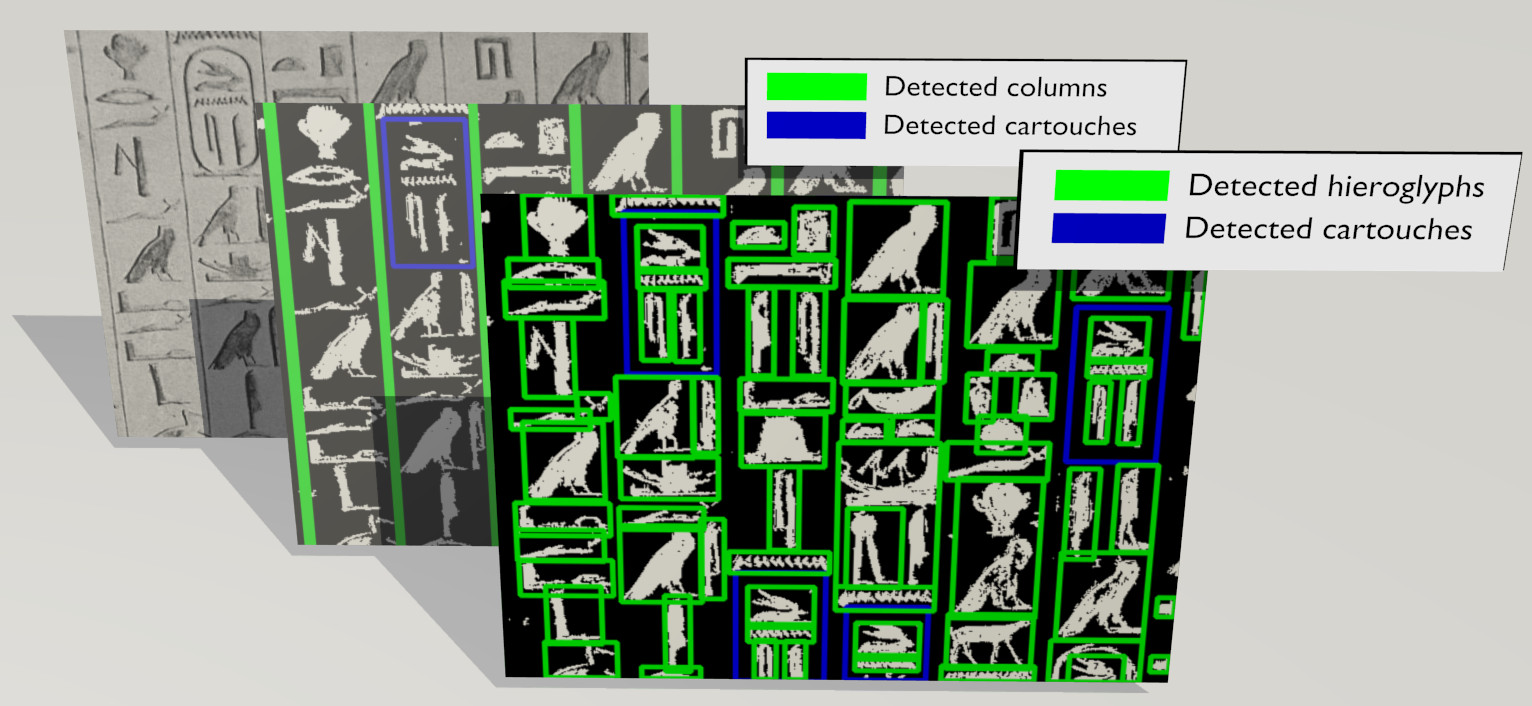

First course of action: improve detection, which is the ability to localise hieroglyphs in a photo before knowing what their type is. This part will also take care of finding the columns along which the hieroglyphs are written, as well as finding the cartouches that contain the name of a Pharaoh. The most difficult part of hieroglyph detection is separating clustered hieroglyphs from each other, but with the use of clever algorithms, the computer will be able to differentiate between hieroglyphs that are close to each other. Here is a quick preview of the current state:

Order:

- Raw input image

- Detection output + found columns and cartouches (a cartouche is the frame around a royal name)

- Hieroglyph localisation
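The post does not say which algorithms are used, so the following is only a common baseline, not TombReader's method. It assumes the photo has already been converted to a binary grid in which 1 means carved or inked pixel. Glyphs are localised as connected blobs, and columns are found by looking for vertical strips without any ink. The function names `find_glyph_boxes` and `find_columns` are hypothetical, and cartouche detection is not covered.

```python
from collections import deque


def find_glyph_boxes(image):
    """Return one (top, left, bottom, right) box per 4-connected blob of 1-pixels."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] != 1 or seen[r][c]:
                continue
            # Flood-fill outwards from this pixel, growing the bounding box as we go.
            queue = deque([(r, c)])
            seen[r][c] = True
            top, left, bottom, right = r, c, r, c
            while queue:
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and image[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


def find_columns(image):
    """Return (first_x, last_x) ranges separated by pixel columns with no ink."""
    cols = len(image[0])
    ink = [any(row[x] for row in image) for x in range(cols)]
    spans, start = [], None
    for x, has_ink in enumerate(ink):
        if has_ink and start is None:
            start = x
        elif not has_ink and start is not None:
            spans.append((start, x - 1))
            start = None
    if start is not None:
        spans.append((start, cols - 1))
    return spans


# A tiny made-up "photo": two text columns separated by an empty strip at x = 2.
image = [
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [1, 0, 0, 1, 1],
]
boxes = find_glyph_boxes(image)
columns = find_columns(image)
```

This baseline also shows why clustered hieroglyphs are the hard part: two glyphs that touch in the binary image end up in the same blob and get a single box, so something smarter is needed to split them.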